High-speed cameras are playing an increasingly vital role in aerospace testing. Luis Castillo-Tejeda, an aerospace engineer at the US National Institute for Aviation Research in Wichita, Kansas, underscores their importance, saying: “Every single thing that we do, we try to shoot with at least two high-speed cameras.”

In engine testing, high-speed cameras are particularly useful, helping engineers understand the performance of aerospace propulsion systems. The high-quality images that these cameras capture at extremely high frame rates give aerospace engineers vital information about the behavior of the thrust and combustion processes occurring within engines.

A standard camera records at a frame rate that “just needs to be fast enough to fool the eye,” says Stephan Trost, CEO of Swiss high-speed camera developer AOS Technologies. “Human perception interprets this as a movie,” he says. But the rate is “too low to observe fast mechanical events that happen in a fraction of a second.

“Technically speaking, the time between two pictures in a 50fps recording is 20 milliseconds,” says Trost. “Such a recording does not show what is in between these images.

“For a 500fps high-speed camera, the time between pictures is two milliseconds. This is a ten-fold increase of information provided and playing back in slow motion the engineer can analyze what is happening in detail.”
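The arithmetic behind Trost's example is simply that the time between frames is the reciprocal of the frame rate. A minimal sketch in Python, in which the function name `frame_interval_ms` is an illustrative choice and not part of any camera vendor's software:

```python
def frame_interval_ms(fps: float) -> float:
    """Return the time between consecutive frames, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    # One second is 1,000 ms, shared equally between the frames recorded in it.
    return 1000.0 / fps


# The two frame rates quoted in the article.
for fps in (50, 500):
    print(f"{fps} fps -> {frame_interval_ms(fps):g} ms between frames")
```

Run as written, this should reproduce the 20-millisecond and two-millisecond intervals quoted above.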

Bird strikes

Modern cameras are capable of much higher speeds than 500 fps. Around 10,000 fps is a common data acquisition speed for the current generation, says Tim Schmidt, vice president at Trilion Quality Systems, a US-based company that provides high-speed camera technology and services to the aerospace industry.

Cameras can be used in different ways during engine tests. Trilion conducts jet engine testing for major aerospace developers including GE, Pratt & Whitney and Honeywell, as well as rocket testing for NASA.

“There are a lot of dynamic events which need to be evaluated, such as checking whether fan blade tips are touching rub strips, which are typically applied every 90° during balance runs,” says Schmidt.

Other tests examine the effects of bird, ice or rain ingestion on an engine. Projectiles used in these tests can include chickens (sometimes up to five at a time), ballistic gelatin to replicate a bird strike, or even drones.

“The interactions with the fan blades are studied,” says Schmidt. “Mount dynamics are evaluated during major tests such as fan blade outs, to see if the mounts snub, reaching their mechanical limits. Deformations of fan casings are also studied.”

For tests that involve an impact event like a bird ingestion, the camera footage will be used to answer a range of questions. Schmidt says: “Did the impacts of projectiles hit exactly where expected? What were the peak deflections that occurred? Did gaps open up between panels? Did cracks occur?

“Also – what was the elapsed time between impact and maximum deflections? And do the test results match the finite element model predictions?”

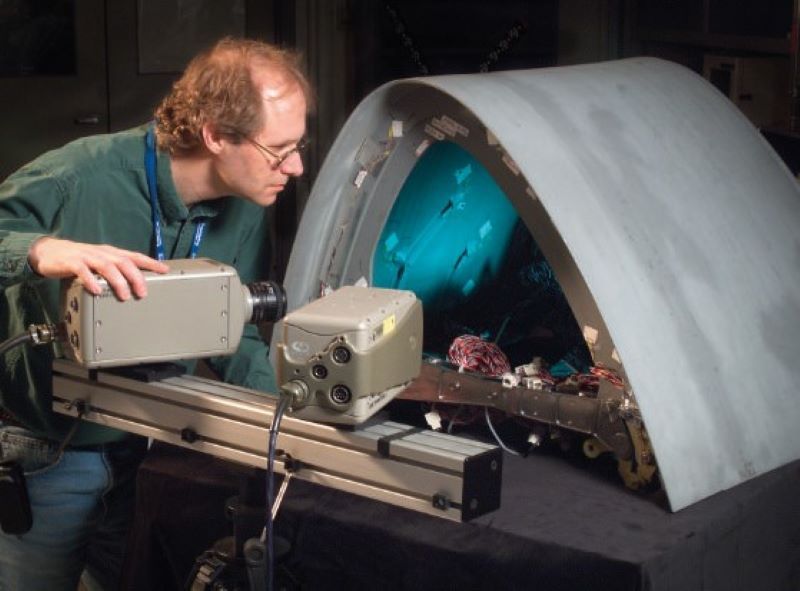

Interior view

Besides collision events, cameras are also used more broadly to examine the combustion process in an engine. Schmidt, for example, has been asked by aerospace developers to image Mach diamonds, the standing wave patterns that form in the supersonic exhaust plume of an aircraft’s propulsion system. To do this, he used schlieren photography, a technique developed in the nineteenth century to study supersonic motion, which lets the camera visualize local flow behavior.

The capabilities of today’s generation of cameras are extraordinary. In his test set-ups at NIAR, Castillo-Tejeda uses cameras “capable of going up to almost half a million frames per second.”

NIAR also uses high-speed cameras with much more modest frame rates of up to 2,000 fps. “You can imagine that there is a huge cost difference between them,” says Castillo-Tejeda.

The high frame rates are matched by high resolution, with the highest-speed cameras capable of providing one megapixel (1,000,000 pixels). “We don’t have the best of the best, but we’re pretty close,” says Castillo-Tejeda.

Faster captures

The best of the best could be a new ultra-fast laser camera developed by a team of physicists led by the University of Gothenburg, Sweden. The camera, which the researchers claim is the world’s fastest single-shot laser camera, uses a laser beam pulse to record events at 12.5 billion fps. This ultra-high-speed frame rate means the camera is capable of capturing combustion events within engines that until now have been impossible to image.

“Today’s cameras are limited to about a million frames per second but in a combustion event a lot of the reactions happen on a nanosecond timescale – that means billions of frames per second,” says the University of Gothenburg’s Yogeshwar Nath Mishra, one of the lead researchers on the project.
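To make Mishra's point concrete, the number of frames a camera records during an event is the frame rate multiplied by the event's duration. The sketch below assumes a hypothetical 10-nanosecond event (an illustrative figure, not one given by the researchers) and compares the two frame rates mentioned above. The function and constant names are likewise illustrative.

```python
def frames_during_event(fps: int, duration_ns: int) -> float:
    """Return how many frames are recorded during an event lasting duration_ns."""
    # There are one billion nanoseconds in a second.
    return fps * duration_ns / 1_000_000_000


EVENT_NS = 10  # hypothetical event duration, chosen only for illustration

conventional = frames_during_event(1_000_000, EVENT_NS)        # about a million fps
laser_camera = frames_during_event(12_500_000_000, EVENT_NS)   # 12.5 billion fps

print(f"1 million fps:    {conventional:g} frames during the event")
print(f"12.5 billion fps: {laser_camera:g} frames during the event")
```

Under that assumption, a million-fps camera would record only a small fraction of one frame during the event, while the 12.5 billion fps camera would record more than a hundred.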

Improvements in frame rate and resolution are the two main factors pushing the growing use of high-speed camera technology in aerospace testing, says Trost.

“Higher resolution offers greater spatial detail, while a higher frame rate provides improved temporal resolution,” says Trost. “Both factors contribute to capturing more details, enabling a finer analysis of fast mechanical events.”

Schmidt breaks down this advancement in camera performance, noting that “in 2003, the maximum frame rate available at a resolution of 256 x 256 pixels was 27,000 fps. By 2023, that had increased to 425,000 fps.”
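Schmidt's two figures can be turned into a growth factor and a raw pixel throughput with a few lines of arithmetic. This is a back-of-the-envelope sketch using only the numbers quoted above; the function names are illustrative, and the throughput counts pixels only, since the article does not state a bit depth.

```python
def speedup(fps_then: int, fps_now: int) -> float:
    """Return how many times faster the newer frame rate is."""
    return fps_now / fps_then


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Return the number of pixels read out each second at a given frame rate."""
    return width * height * fps


WIDTH, HEIGHT = 256, 256   # resolution quoted by Schmidt
FPS_2003 = 27_000
FPS_2023 = 425_000

print(f"Frame-rate increase, 2003 to 2023: {speedup(FPS_2003, FPS_2023):.1f}x")
print(f"Pixel throughput in 2023: {pixels_per_second(WIDTH, HEIGHT, FPS_2023):,} pixels/s")
```

On these figures the maximum frame rate at 256 x 256 pixels rose roughly sixteen-fold in 20 years, to a readout of nearly 28 billion pixels per second.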

Another major milestone was the advent of digital cameras to replace film, which started in the early 2000s. “This made results available immediately, instead of waiting up to several days for film developing,” Schmidt says.

There have also been important advances in light sensitivity and in the dynamic range of cameras, the latter allowing a broader range of light and dark regions to be captured simultaneously.

Another key advancement in the past 20 years has been the ability to quantify measurements from high-speed video.

The most common way of doing this is via a technique known as 3D digital image correlation (DIC). This uses two high-speed cameras to measure displacements and strains at thousands of points, mapping the results as color overlays.

By using two or more cameras and calibrating the cameras to each other, engineers can create a 3D model of the test item. “We have software that knows the intrinsic and extrinsic parameters of the cameras, and it’s able to output coordinates,” says Castillo-Tejeda. “All the images we take during the test get fitted into that software, and within the software we are able to make these measurements.”
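How calibrated cameras yield coordinates can be illustrated with the simplest stereo case. The sketch below is not the DIC software Castillo-Tejeda describes. It assumes an idealized, rectified pair of identical pinhole cameras, and every name and number in it is hypothetical. Here the intrinsic parameters are the focal length and principal point, and the only extrinsic parameter is the baseline between the two cameras.

```python
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Hypothetical calibration for an ideal, rectified stereo pair."""
    focal_px: float    # intrinsic: focal length, in pixels
    cx: float          # intrinsic: principal point, x (pixels)
    cy: float          # intrinsic: principal point, y (pixels)
    baseline_m: float  # extrinsic: spacing between the camera centres (metres)


def triangulate(rig, left_uv, right_uv):
    """Return (x, y, z) in metres, in the left camera's frame, for a point
    seen at pixel left_uv in the left image and right_uv in the right image."""
    (ul, vl), (ur, _) = left_uv, right_uv
    disparity = ul - ur  # horizontal shift of the point between the two views
    if disparity <= 0:
        raise ValueError("point must have positive disparity")
    z = rig.focal_px * rig.baseline_m / disparity  # depth from similar triangles
    x = (ul - rig.cx) * z / rig.focal_px
    y = (vl - rig.cy) * z / rig.focal_px
    return (x, y, z)


def displacement(p_before, p_after):
    """Return the 3D displacement of a tracked point between two frames."""
    return tuple(b - a for a, b in zip(p_before, p_after))


rig = StereoRig(focal_px=1000.0, cx=512.0, cy=512.0, baseline_m=0.5)
before = triangulate(rig, (562.0, 487.0), (312.0, 487.0))
after = triangulate(rig, (572.0, 487.0), (322.0, 487.0))
print("position:", before)
print("displacement:", displacement(before, after))
```

Real DIC systems track thousands of surface points this way and use full calibration models, including lens distortion and arbitrary camera orientations, which this sketch leaves out.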

With so much aerospace testing taking place in simulations, DIC is a valuable way of validating virtual models, says Castillo-Tejeda.

“Around 90% of the physical testing is replicated on a simulation and high-speed cameras provide the data we use to determine how accurate our models are,” says Castillo-Tejeda.

Test setup

The physical arrangement of cameras for testing can vary considerably, depending on whether it is a test of the full engine or a smaller-scale test of an engine component.

“For a destructive test like a fan blade off, a full engine will only be used for the certification test,” says Schmidt. “Otherwise, there will be a lot of rig testing, such as using just the fan and booster section and the containment ring and possibly an inlet.”

For a full engine test it is “not uncommon for there to be about ten high-speed cameras,” he adds. “There will be one or two stereo pairs for DIC of the containment displacements and strains, three or more front-view cameras, some side-view, some looking forward at the outside of the booster.

“Sometimes cameras or stereo pairs are looking at each of the two mounts. The front-view camera will be assessing things like spinner tip and inlet orbital motions, spool-down dynamics, interactions between projectiles and fan blades.”

The use of multiple cameras also provides a safeguard if a camera fails. This is important because engine tests are expensive to carry out – a full engine test is likely to run to “tens of millions of dollars,” says Schmidt.

The high cost means that there is immense pressure on high-speed camera operators to ensure the image is captured without a hitch.

Schmidt illustrates this with a story once told to him by a fellow expert in the field. “He told me that on his first week on the job, his boss told him that there are many acceptable mistakes but that if he ever does not successfully capture the high-speed video – do not write, do not call, just leave – because you’re fired.”

Capturing space flight history

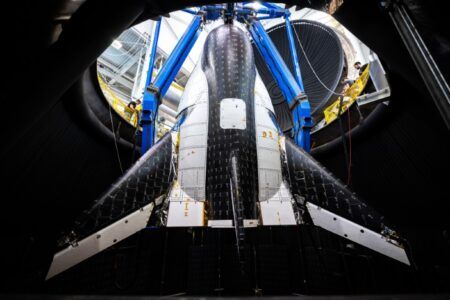

Late last year NASA sent its Orion spacecraft on a lunar flyby, the first time the space agency had visited the Moon in five decades.

That historic mission, Artemis I, began in mid-November with Orion’s launch as the payload of the Space Launch System rocket, followed by two flybys of the Moon and a splashdown in the Pacific Ocean in early December. It was captured with an array of 24 cameras on the rocket and spacecraft.

As well as capturing incredible close-up images of the Earth and the Moon from space, the cameras played an important role in helping NASA engineers monitor the spacecraft throughout the flight.

Four cameras attached to Orion’s solar array wings on the service module offered outside views of the spacecraft. They also took the released images of Orion with the Earth or Moon in the background.

There were also two high-speed cameras used during the mission. These cameras, which were mounted externally, were used to monitor the opening of the parachute during the spacecraft’s re-entry to Earth.

Another externally mounted camera was used to capture the take-off, providing the “rocket cam” view the public commonly sees during launches.