One of the main aims of simulation and modeling in aerospace is to accelerate the development of systems and reduce development costs. Progress in simulation technologies across all domains suggests that numerical simulation may one day be all that is needed to develop the systems of the future.

This viewpoint assumes that once every aspect of multi-physics modeling is mastered, simulation will fully satisfy its users, technical management teams in particular.

But the path toward this hypothetical situation requires a paradigm shift in the way engineers think about numerical simulation. In the future, simulation must be treated not as an indicator but as a decision-making tool. To achieve this, the major players in simulation and their users now recognize the need to work with the concept of simulation credibility.

Proving validity

It is useful to consider what the credibility of a simulation is and how to evaluate it in a general framework. Credibility refers to the ability of modelers to prove that their model is predictive and to justify its validity.

Credibility should not be confused with verification, that is, the ability to show that the model correctly solves its mathematical equations. To evaluate credibility, many technical managers have adapted well-known maturity scales such as TRL (Technology Readiness Level).

These scales are mostly suited to the development of systems and are of limited use when evaluating the maturity of a simulation model. Since the early 2000s, some research teams, including at NASA and the US Department of Energy, have been developing maturity models dedicated to the practice of numerical simulation.

Maturity models aim to facilitate exhaustive and precise evaluations of an organization’s ability to carry out a relevant simulation according to several criteria. These criteria could include, for example, the description of the structure’s constitutive parameters, geometry and boundary conditions, and the model’s ability to account for uncertainties.

Simulation for certification

Ultimately, the objective of maturity models is to predict the uncertainties related to the simulation model and contribute to its acceptability.

In aerospace, people such as technical management or certification authorities need to be convinced of a digital model’s credibility. These people could one day be called upon to give their opinion on partially digital certifications.

It is therefore a high priority for technical decision-makers to progressively bring simulation tools up to this credibility level. This is especially true for major aircraft manufacturers, who are now pushing for digital certification at the aircraft level.

The need for evaluation

To come back to the maturity of models, and taking the case we know best, structural mechanics, some elements are now well mastered: for example, verification through mesh convergence and the numerical resolution of constitutive (behavior) laws.
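As an illustration of what a mesh convergence check can look like, here is a minimal sketch based on the classical three-mesh estimate of the observed order of convergence and Richardson extrapolation. The function names and the synthetic "simulation results" are assumptions made for this example; they do not come from any particular solver or from a real study.

```python
import math

def observed_order(f_coarse, f_medium, f_fine, r):
    """Observed order of convergence from three meshes refined by a constant ratio r > 1."""
    return math.log(abs(f_coarse - f_medium) / abs(f_medium - f_fine)) / math.log(r)

def richardson_extrapolate(f_medium, f_fine, r, p):
    """Estimate of the mesh-independent value from the two finest meshes."""
    return f_fine + (f_fine - f_medium) / (r**p - 1.0)

def synthetic_result(h):
    """Stand-in for a solver output (hypothetical): exact value 1.0 plus a second-order error."""
    return 1.0 + 0.5 * h**2

r = 2.0        # refinement ratio between successive meshes (assumed constant)
h_fine = 0.1   # element size of the finest mesh (arbitrary units)
f_fine, f_medium, f_coarse = (synthetic_result(h_fine * r**k) for k in range(3))

p = observed_order(f_coarse, f_medium, f_fine, r)
f_extrapolated = richardson_extrapolate(f_medium, f_fine, r, p)
print(f"observed order: {p:.3f}, extrapolated value: {f_extrapolated:.6f}")
```

With real results, an observed order far from the theoretical order of the element formulation is usually a sign that the meshes are not yet in the asymptotic range, or that something other than discretization error dominates.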

Other elements are more systematically ignored, such as detailed knowledge of the boundary conditions applied to the system, the validation tools and their impact on the result obtained, or the uncertainties related to the various unknowns of the simulation problem.

At EikoSim, we most often see clients who have acquired a certain confidence in their modeling for lack of proof to the contrary. They have often not had the time or the means to apply a systematic approach to evaluating the quality of their model.

Boundary conditions are one example. It is very common to model the links between two systems too simply, which can introduce a large error in the stiffness of the overall system.

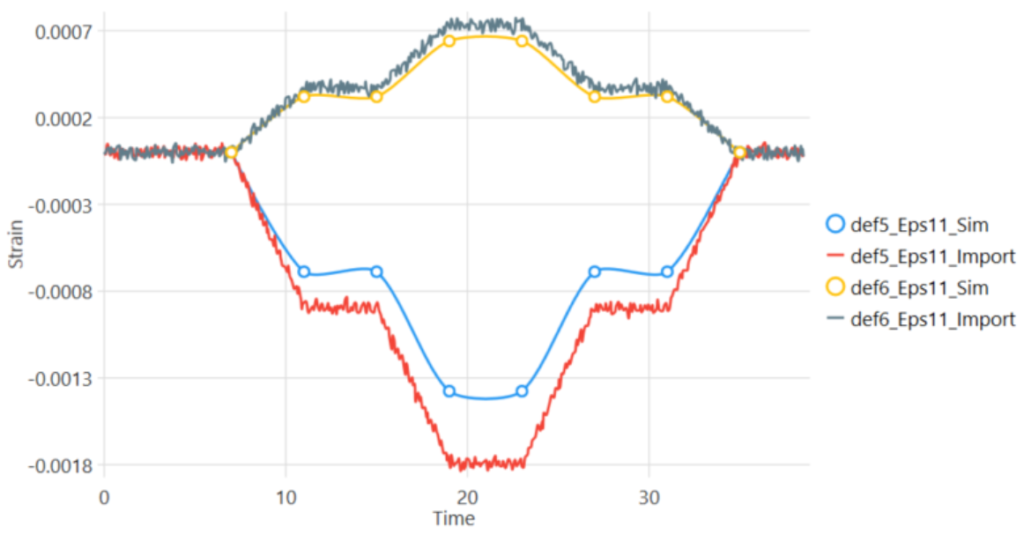

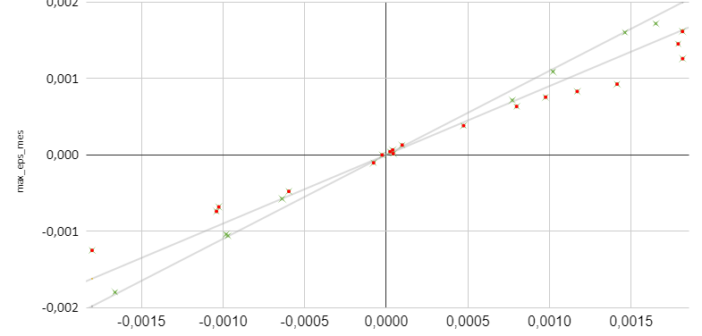
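To give an order of magnitude for the effect of an oversimplified link, here is a minimal sketch comparing a cantilever beam with a perfectly rigid clamp to the same beam attached through a rotational spring. It uses the standard beam-theory tip deflection under a transverse tip load, P·L³/(3EI), plus the rigid-body term P·L²/k_θ due to the root rotation. All numerical values, and the name `k_theta`, are hypothetical and chosen only for illustration.

```python
def tip_stiffness(E, I, L, k_theta=None):
    """Tip stiffness (N/m) of a cantilever beam under a transverse tip load.

    k_theta is the rotational stiffness of the root connection in N*m/rad;
    None means a perfectly rigid clamp.
    """
    compliance = L**3 / (3.0 * E * I)   # bending of the beam itself
    if k_theta is not None:
        compliance += L**2 / k_theta    # extra tip deflection from root rotation
    return 1.0 / compliance

# Hypothetical values, for illustration only
E = 70e9        # Young's modulus, Pa
I = 1.0e-8      # second moment of area, m^4
L = 0.5         # beam length, m
k_theta = 2.0e4 # rotational stiffness of the real connection, N*m/rad

k_clamped = tip_stiffness(E, I, L)
k_flexible = tip_stiffness(E, I, L, k_theta)
overestimate = k_clamped / k_flexible - 1.0
print(f"rigid clamp overestimates tip stiffness by {overestimate:.0%}")
```

With these assumed values the idealized clamp overestimates the tip stiffness by 3EI/(k_θ·L) = 21%, an error that no amount of mesh refinement would remove.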

As far as the validation tools themselves are concerned, i.e. data comparison, it is difficult today for engineers to reliably estimate the errors linked to the use of these tools.

One thinks, for example, of the calculation of virtual strain gauges, which is very dependent on the method used, or of the various reprojection errors caused by using innovative solutions such as image correlation, laser vibrometry or fiber optics.
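The method dependence of a virtual strain gauge can be illustrated with a minimal sketch: the same simulated strain field gives a different "gauge reading" depending on whether the strain is sampled at the gauge center or averaged over the gauge length. The strain field, gauge position and gauge length below are hypothetical, and these two definitions are only two among the possible ways to compute a virtual gauge.

```python
def strain_field(x):
    """Hypothetical axial strain along a line near a stress concentration (x in mm)."""
    return 1000e-6 * (1.0 + 4.0 / (1.0 + x) ** 2)

def gauge_point(x_center):
    """Virtual gauge defined as the strain at the gauge center."""
    return strain_field(x_center)

def gauge_average(x_center, length, n=1000):
    """Virtual gauge defined as the mean strain over the gauge length (midpoint rule)."""
    x_start = x_center - length / 2.0
    dx = length / n
    return sum(strain_field(x_start + (i + 0.5) * dx) for i in range(n)) / n

x_center = 2.0  # gauge center position, mm (assumed)
length = 3.0    # gauge length, mm (assumed)

eps_point = gauge_point(x_center)
eps_avg = gauge_average(x_center, length)
gap = (eps_avg - eps_point) / eps_point
print(f"point: {eps_point * 1e6:.0f} microstrain, "
      f"average: {eps_avg * 1e6:.0f} microstrain, gap: {gap:.1%}")
```

In a strain gradient the two definitions disagree, so a test-simulation comparison should state which one was used and treat the gap as part of the validation uncertainty.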

Even putting these factors aside, it is important to note that most of these operations are performed in tools that are not suited to a systematic approach: Excel spreadsheets in most cases, or homemade scripts at best. A considerable amount of time is wasted on manual data management. One engineer confided to us that he spent almost as much time managing and converting data for validation as he did working on the model itself.

Maturing modeling

The consideration of all these factors enables an evaluation of the global credibility of a model. In addition, it allows us to define avenues of improvement where we detect weaknesses. The breakdown of the model’s credibility into criteria is therefore also a way for organizations to implement a designated action plan in each of these areas.
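The breakdown into criteria described above can be sketched as a small data structure. This is a minimal illustration only: the criteria names are taken from this article, while the 0 to 3 scale, the scores and the `action_plan` helper are hypothetical and do not reproduce any published maturity model.

```python
from dataclasses import dataclass

@dataclass
class Criterion:
    name: str
    level: int  # hypothetical scale: 0 = not addressed ... 3 = backed by test evidence

def action_plan(criteria, target_level):
    """Return the criteria that fall below the target level, weakest first."""
    gaps = [c for c in criteria if c.level < target_level]
    return sorted(gaps, key=lambda c: c.level)

# Hypothetical self-assessment of a structural model
assessment = [
    Criterion("geometry", 3),
    Criterion("constitutive parameters", 2),
    Criterion("mesh convergence", 3),
    Criterion("boundary conditions", 0),
    Criterion("validation tools", 1),
    Criterion("uncertainties", 1),
]

plan = action_plan(assessment, target_level=2)
for c in plan:
    print(f"{c.name}: level {c.level}, target 2")
```

Keeping one score per criterion, rather than collapsing everything into a single number, is what makes the weak points visible and lets each of them be assigned its own action.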

In our experience, simply thinking about the maturity of a model is enough to reveal avenues for improvement, and to improve the model more efficiently and with less effort.

Rather than further refining the behavioral model or perfecting the convergence of the mesh, for example, we are convinced that more time should be spent on understanding boundary conditions or interfaces. These topics are typically elements that require testing!

Perhaps because they lie outside the comfort zone of simulation engineers, they are often the subject of strong assumptions that should be verified more closely, even if it means confronting more complex problems. This will be necessary to build the credibility of the models.